Some time ago I finished Ray Kurzweil’s “How to Create a Mind: The Secret of Human Thought Revealed”. The book is definitely worth reading, including for anyone working in any subdomain of artificial intelligence.

Kurzweil recapitulates his work on artificial intelligence and relates it to the latest research on how minds – biological minds, to be more specific – work. I found it funny that he goes into some of the work Nuance is carrying out in medicine and semantics. I worked on some items from a company called Language & Computing, which Nuance acquired several years ago.

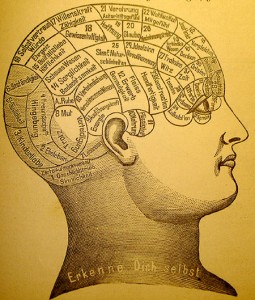

One of the chapters I liked the most was chapter 4, The Biological Neocortex. I have read a few popular books on neurology and a few more technical articles about neurological research, but there were a few items here that were new to me. Chapter 5 is also pretty fascinating to me. Obviously, the neurological part is the one where I am an absolute layman. In any case, this makes me want to read more about it.

Kurzweil states that the basic units of the neocortex are modules composed of around one hundred neurons. According to him – and some other researchers – connections within each module are mostly inherited, that is, based on our genes, whereas connections between modules are above all the product of experience, of training.

Chapter 7, The Biologically Inspired Digital Neocortex, is the one with the most passages I wanted to skim over, but I still read it through. Here I started to find more things I have second thoughts about. Firstly, Kurzweil claims that “no human can claim to have mastered all of Wikipedia…[it] is only part of Watson’s knowledge base”, as if Watson had mastered it. Has it? I do not think so. I have worked a lot with Wikipedia and have studied a zillion projects trying to garner as much new semantic information as possible in the most reliable way (many of these projects provide data for DBpedia, but many more for private companies), and I wouldn’t say we are even close to producing a “full” extraction of Wikipedia information. What would it even look like? Even if we are talking about a purely statistical analysis of Wikipedia pages: what does that mean? Statistics are, one way or the other, attached to a model, to a hypothesis. How many hypotheses can we create from any medium-sized Wikipedia page?

Kurzweil warns about how difficult the concept of consciousness is and how even the most rational scientists can fall into the vaguest ramblings here. And yet, for me he fails to deliver here. He gets as fuzzy as anyone else. In chapter 7 he goes over the Turing test and makes his predictions about when a computer will pass it. He discusses Searle’s Chinese room and argues that if what Searle said about that room is true, “the human brain would not be judged capable of thinking either”.

Perhaps the issue I have is that I don’t even agree with what the Turing test is supposed to mean. We recently saw how the University of Reading competition went. When I read the dialogues that made a third of the judges believe they were dealing with a human being, I was surprised, to put it mildly, that anyone could fall for it. Now, we need to ask: what is the difference between the 1/3 who believed the bot was a human and the 2/3 who didn’t? I don’t think the answer is “they are less clever”. At least I think that cannot be the only explanation. I reckon the gullible third know less than the others about what kinds of reactions we can automate today.

I think consciousness, at least as we see it in humans, is closely related to a very deep degree of self-reference: the existence of a system that is aware of itself, of itself being aware of itself, and so on recursively. If we relate this system to the idea of the Chinese room: human consciousness would be capable not only of acting as a Chinese room towards the external world, but also of creating internal, virtual Chinese rooms, and any other kinds of rooms it can think of, that it can analyse by itself and within itself.

All in all, although Kurzweil tends to exaggerate here and there, his writing is readable, he provides some interesting pieces of information for everyone, and he contributes to the discussion about how we can build more intelligent computer systems.